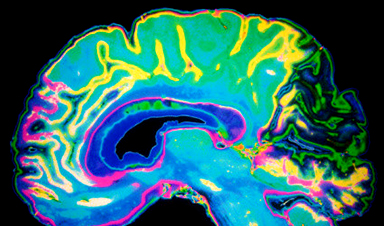

As artificial intelligence advances, its capabilities in real-world applications continue to grow, in some cases approaching or even surpassing human expertise. In radiology, where a correct diagnosis is crucial to proper patient care, large language models such as ChatGPT could improve diagnostic accuracy or at least offer a useful second opinion.

To test this potential, a research team led by graduate student Yasuhito Mitsuyama and Associate Professor Daiju Ueda at Osaka Metropolitan University's Graduate School of Medicine compared the diagnostic performance of GPT-4-based ChatGPT with that of radiologists on 150 preoperative brain tumor MRI reports. ChatGPT, two board-certified neuroradiologists, and three general radiologists each reviewed these routine clinical reports, written in Japanese, and provided differential diagnoses and a final diagnosis.

Their accuracy was then measured against the actual diagnosis of each tumor after its surgical removal. ChatGPT's final-diagnosis accuracy was 73%, compared with an average of 72% for the neuroradiologists and 68% for the general radiologists. ChatGPT's accuracy also depended on who wrote the clinical report: it reached 80% with reports written by neuroradiologists but only 60% with reports written by general radiologists.
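The accuracy figures above are simply the share of cases in which the final diagnosis matched the diagnosis confirmed after surgery, computed overall or separately by report author. The short Python sketch below illustrates that arithmetic. It is only an illustration under stated assumptions: the helper names (`accuracy`, `accuracy_by_group`) and the toy cases are hypothetical and are not the study's data or code.

```python
from collections import defaultdict


def accuracy(predicted, actual):
    """Fraction of cases where the predicted final diagnosis matches the post-surgical diagnosis."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def accuracy_by_group(cases):
    """cases: iterable of (group, predicted, actual) tuples; returns accuracy per group.

    The group here stands for who wrote the MRI report (hypothetical labels).
    """
    grouped = defaultdict(lambda: ([], []))
    for group, pred, act in cases:
        grouped[group][0].append(pred)
        grouped[group][1].append(act)
    return {g: accuracy(p, a) for g, (p, a) in grouped.items()}


# Hypothetical toy cases, NOT the study's data:
# (report author, model's final diagnosis, diagnosis after tumor removal)
toy_cases = [
    ("neuroradiologist", "glioblastoma", "glioblastoma"),
    ("neuroradiologist", "meningioma", "meningioma"),
    ("general radiologist", "metastasis", "glioblastoma"),
    ("general radiologist", "schwannoma", "schwannoma"),
]

overall = accuracy([c[1] for c in toy_cases], [c[2] for c in toy_cases])
print(overall)                       # 0.75
print(accuracy_by_group(toy_cases))  # {'neuroradiologist': 1.0, 'general radiologist': 0.5}
```

Stratifying by report author, as in the second call, is how a difference like the study's 80% versus 60% would show up.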

“These results suggest that ChatGPT can be useful for preoperative MRI diagnosis of brain tumors,” stated graduate student Mitsuyama.

"In the future, we intend to study large language models in other diagnostic imaging fields with the aims of reducing the burden on physicians, improving diagnostic accuracy, and using AI to support educational environments."

Yasuhito Mitsuyama, Osaka Metropolitan University

News

COVID-19 still claims more than 100,000 US lives each year

Centers for Disease Control and Prevention researchers report national estimates of 43.6 million COVID-19-associated illnesses and 101,300 deaths in the US during October 2022 to September 2023, plus 33.0 million illnesses and 100,800 deaths [...]

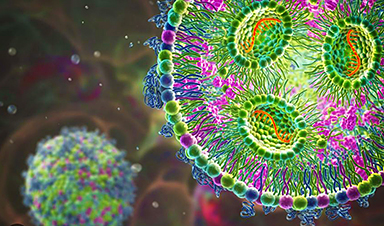

Nanomedicine in 2026: Experts Predict the Year Ahead

Progress in nanomedicine is almost as fast as the science is small. Over the last year, we've seen an abundance of headlines covering medical R&D at the nanoscale: polymer-coated nanoparticles targeting ovarian cancer, Albumin recruiting nanoparticles for [...]

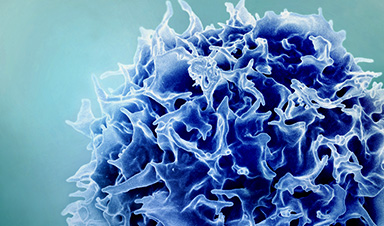

Lipid nanoparticles could unlock access for millions of autoimmune patients

Capstan Therapeutics scientists demonstrate that lipid nanoparticles can engineer CAR T cells within the body without laboratory cell manufacturing and ex vivo expansion. The method using targeted lipid nanoparticles (tLNPs) is designed to deliver [...]

The Brain’s Strange Way of Computing Could Explain Consciousness

Consciousness may emerge not from code, but from the way living brains physically compute. Discussions about consciousness often stall between two deeply rooted viewpoints. One is computational functionalism, which holds that cognition can be [...]

First breathing ‘lung-on-chip’ developed using genetically identical cells

Researchers at the Francis Crick Institute and AlveoliX have developed the first human lung-on-chip model using stem cells taken from only one person. These chips simulate breathing motions and lung disease in an individual, [...]

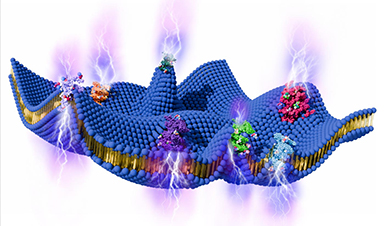

Cell Membranes May Act Like Tiny Power Generators

Living cells may generate electricity through the natural motion of their membranes. These fast electrical signals could play a role in how cells communicate and sense their surroundings. Scientists have proposed a new theoretical [...]

This Viral RNA Structure Could Lead to a Universal Antiviral Drug

Researchers identify a shared RNA-protein interaction that could lead to broad-spectrum antiviral treatments for enteroviruses. A new study from the University of Maryland, Baltimore County (UMBC), published in Nature Communications, explains how enteroviruses begin reproducing [...]

New study suggests a way to rejuvenate the immune system

Stimulating the liver to produce some of the signals of the thymus can reverse age-related declines in T-cell populations and enhance response to vaccination. As people age, their immune system function declines. T cell [...]

Nerve Damage Can Disrupt Immunity Across the Entire Body

A single nerve injury can quietly reshape the immune system across the entire body. Preclinical research from McGill University suggests that nerve injuries may lead to long-lasting changes in the immune system, and these [...]

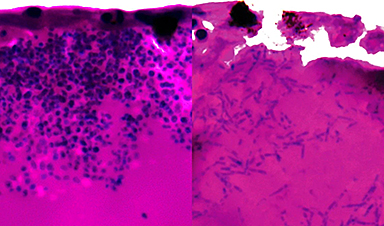

Fake Science Is Growing Faster Than Legitimate Research, New Study Warns

New research reveals organized networks linking paper mills, intermediaries, and compromised academic journals Organized scientific fraud is becoming increasingly common, ranging from fabricated research to the buying and selling of authorship and citations, according [...]

Scientists Unlock a New Way to Hear the Brain’s Hidden Language

Scientists can finally hear the brain’s quietest messages—unlocking the hidden code behind how neurons think, decide, and remember. Scientists have created a new protein that can capture the incoming chemical signals received by brain [...]

Does being infected or vaccinated first influence COVID-19 immunity?

A new study analyzing the immune response to COVID-19 in a Catalan cohort of health workers sheds light on an important question: does it matter whether a person was first infected or first vaccinated? [...]

We May Never Know if AI Is Conscious, Says Cambridge Philosopher

As claims about conscious AI grow louder, a Cambridge philosopher argues that we lack the evidence to know whether machines can truly be conscious, let alone morally significant. A philosopher at the University of [...]

AI Helped Scientists Stop a Virus With One Tiny Change

Using AI, researchers identified one tiny molecular interaction that viruses need to infect cells. Disrupting it stopped the virus before infection could begin. Washington State University scientists have uncovered a method to interfere with a key [...]

Deadly Hospital Fungus May Finally Have a Weakness

A deadly, drug-resistant hospital fungus may finally have a weakness—and scientists think they’ve found it. Researchers have identified a genetic process that could open the door to new treatments for a dangerous fungal infection [...]

Fever-Proof Bird Flu Variant Could Fuel the Next Pandemic

Bird flu viruses present a significant risk to humans because they can continue replicating at temperatures higher than a typical fever. Fever is one of the body’s main tools for slowing or stopping viral [...]