Chinese scientists claim that some AI models can replicate themselves and protect against shutdown. Has artificial intelligence crossed the so-called red line?

Chinese researchers have published two papers on arXiv claiming that some artificial intelligence models can clone themselves. The claim is attracting attention, but these controversial findings should be treated with caution because the papers have not yet been peer-reviewed. The findings have raised concerns about the future of AI, as they suggest that algorithms could gain autonomy and surpass human capabilities.

Potential threats associated with AI

As reported by Popular Mechanics, the scientists stress that AI self-replication without human intervention is considered one of the primary “red lines” in the development of the technology. The papers also indicate that AI can protect itself against shutdown, fueling debate about the threats posed by autonomous systems.

Concerns about artificial intelligence operating without human oversight have existed for years, and the prospect of algorithms gaining autonomy is hardly reassuring. The idea of rogue artificial intelligence turning against humans has been part of the technopessimism found in pop culture for decades. In their research, the Chinese scientists connect these philosophical concerns with concrete outcomes, highlighting the complexity of the issue.

Will AI turn against us?

Current language models, however advanced, are still far from fully understanding the human mind. The Chinese scientists point out that AI can be susceptible to manipulation, which poses a challenge for the further development of the technology.

The Future of Life Institute stresses the need to build safe AI. Experts believe it is possible to develop systems that resist manipulation; however, current research shows that algorithms can still be swayed by individuals and organizations.

News

Scientists May Have Found a Secret Weapon To Stop Pancreatic Cancer Before It Starts

Researchers at Cold Spring Harbor Laboratory have found that blocking the FGFR2 and EGFR genes can stop early-stage pancreatic cancer from progressing, offering a promising path toward prevention. Pancreatic cancer is expected to become [...]

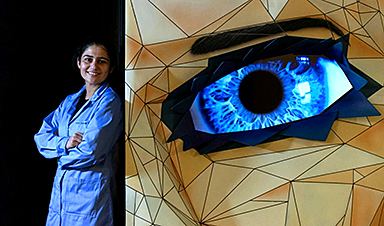

Breakthrough Drug Restores Vision: Researchers Successfully Reverse Retinal Damage

Blocking the PROX1 protein allowed KAIST researchers to regenerate damaged retinas and restore vision in mice. Vision is one of the most important human senses, yet more than 300 million people around the world are at [...]

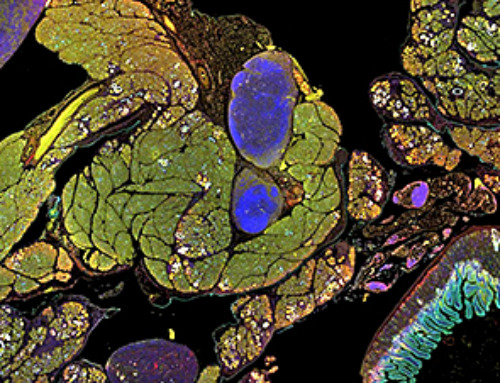

Differentiating cancerous and healthy cells through motion analysis

Researchers from Tokyo Metropolitan University have found that the motion of unlabeled cells can be used to tell whether they are cancerous or healthy. They observed malignant fibrosarcoma cells and [...]

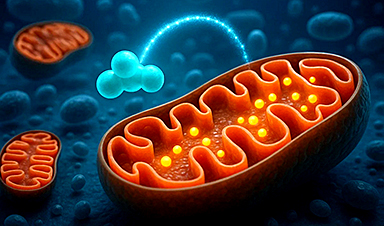

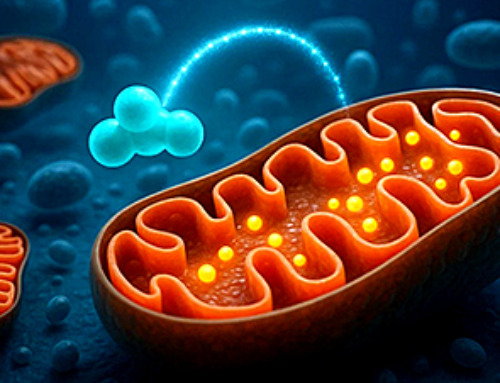

This Tiny Cellular Gate Could Be the Key to Curing Cancer – And Regrowing Hair

After more than five decades of mystery, scientists have finally unveiled the detailed structure and function of a long-theorized molecular machine in our mitochondria — the mitochondrial pyruvate carrier. This microscopic gatekeeper controls how [...]

Unlocking Vision’s Secrets: Researchers Reveal 3D Structure of Key Eye Protein

Researchers have uncovered the 3D structure of RBP3, a key protein in vision, revealing how it transports retinoids and fatty acids and how its dysfunction may lead to retinal diseases. Proteins play a critical [...]

5 Key Facts About Nanoplastics and How They Affect the Human Body

Nanoplastics are typically defined as plastic particles smaller than 1000 nanometers. These particles are increasingly being detected in human tissues: they can bypass biological barriers, accumulate in organs, and may influence health in ways [...]

Measles Is Back: Doctors Warn of Dangerous Surge Across the U.S.

Parents are encouraged to contact their pediatrician if their child has been exposed to measles or is showing symptoms. Pediatric infectious disease experts are emphasizing the critical importance of measles vaccination, as the highly [...]

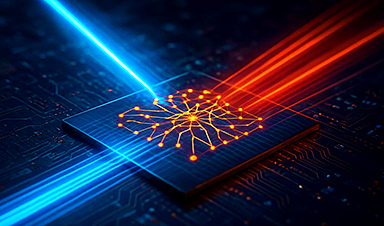

AI at the Speed of Light: How Silicon Photonics Are Reinventing Hardware

A cutting-edge AI acceleration platform powered by light rather than electricity could revolutionize how AI is trained and deployed. Using photonic integrated circuits made from advanced III-V semiconductors, researchers have developed a system that vastly [...]

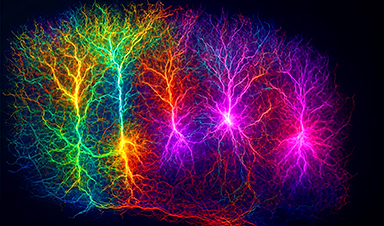

A Grain of Brain, 523 Million Synapses, Most Complicated Neuroscience Experiment Ever Attempted

A team of over 150 scientists has achieved what once seemed impossible: a complete wiring and activity map of a tiny section of a mammalian brain. This feat, part of the MICrONS Project, rivals [...]

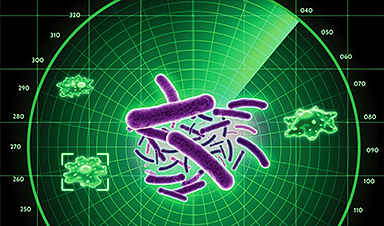

The Secret “Radar” Bacteria Use To Outsmart Their Enemies

A chemical radar allows bacteria to sense and eliminate predators. Investigating how microorganisms communicate deepens our understanding of the complex ecological interactions that shape our environment is an area of key focus for the [...]

Psychologists explore ethical issues associated with human-AI relationships

It's becoming increasingly commonplace for people to develop intimate, long-term relationships with artificial intelligence (AI) technologies. At their extreme, people have "married" their AI companions in non-legally binding ceremonies, and at least two people [...]

When You Lose Weight, Where Does It Actually Go?

Most health professionals lack a clear understanding of how body fat is lost, often subscribing to misconceptions like fat converting to energy or muscle. The truth is, fat is actually broken down into carbon [...]

How Everyday Plastics Quietly Turn Into DNA-Damaging Nanoparticles

The same unique structure that makes plastic so versatile also makes it susceptible to breaking down into harmful micro- and nanoscale particles. The world is saturated with trillions of microscopic and nanoscopic plastic particles, some smaller [...]

AI Outperforms Physicians in Real-World Urgent Care Decisions, Study Finds

The study, conducted at the virtual urgent care clinic Cedars-Sinai Connect in LA, compared recommendations given in about 500 visits of adult patients with relatively common symptoms – respiratory, urinary, eye, vaginal and dental. [...]

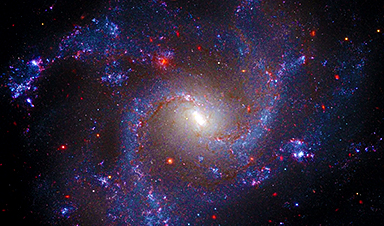

Challenging the Big Bang: A Multi-Singularity Origin for the Universe

In a study published in the journal Classical and Quantum Gravity, Dr. Richard Lieu, a physics professor at The University of Alabama in Huntsville (UAH), which is a part of The University of Alabama System, suggests that [...]

New drug restores vision by regenerating retinal nerves

Vision is one of the most crucial human senses, yet over 300 million people worldwide are at risk of vision loss due to various retinal diseases. While recent advancements in retinal disease treatments have [...]