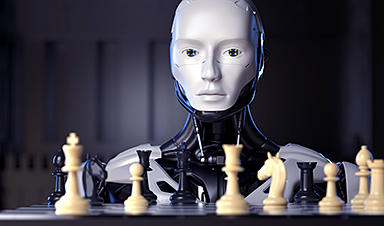

"System 0" represents an emerging cognitive tool powered by AI that works alongside human intuition and analysis to enhance cognitive abilities.

This new system promises to support complex decision-making and problem-solving but requires careful management to avoid overreliance and ensure ethical use.

Introducing System 0

The growing interaction between humans and artificial intelligence (AI) is shaping a new way of thinking, known as System 0. This new cognitive framework exists outside the human mind but has the potential to enhance our cognitive abilities. System 0 works alongside the two established modes of human thought: System 1, which is fast, intuitive, and automatic, and System 2, which is slower, more analytical, and reflective.

At the same time, System 0 adds a layer of complexity that reshapes the cognitive landscape in which we operate. It could represent a significant leap forward in how we think and make decisions, but it is essential that we use this progress to strengthen our cognitive autonomy and avoid becoming overly reliant on AI.

The concept is detailed in an article titled "The Case for Human-AI Interaction as System 0 Thinking," published on October 22, 2024, in the journal Nature Human Behaviour. The article is authored by a team of researchers led by Professor Giuseppe Riva, director of the Humane Technology Lab at Università Cattolica's Milan campus and the Applied Technology for Neuropsychology Lab at Istituto Auxologico Italiano IRCCS in Milan, and Professor Mario Ubiali from Università Cattolica's Brescia campus. The study was conducted in collaboration with Massimo Chiriatti from Lenovo's Infrastructure Solutions Group in Milan, Professor Marianna Ganapini from Union College in Schenectady, New York, and Professor Enrico Panai from Università Cattolica's Milan campus.

External Cognitive Support Systems

An external drive stores data that are not on the computer itself, and we can work with those data by plugging the drive into any PC wherever we are. In the same way, artificial intelligence, with its vast processing and data-handling capabilities, can act as an external circuit to the human brain that is capable of enhancing it. Hence the idea of System 0, which is essentially a form of "external" thinking that relies on the capabilities of AI.

By managing enormous amounts of data, AI can process information and provide suggestions or decisions based on complex algorithms. However, unlike intuitive or analytical thinking, System 0 does not assign intrinsic meaning to the information it processes. In other words, AI can perform calculations, make predictions, and generate responses without truly "understanding" the content of the data it works with.

Humans, therefore, must interpret the results produced by AI and give them meaning themselves. It's like having an assistant that efficiently gathers, filters, and organizes information but still requires our intervention to make informed decisions. This cognitive support provides valuable input, but the final control must always remain in human hands.

The Risks of Overreliance on AI

"The risk," Professors Riva and Ubiali emphasize, "is relying too much on System 0 without exercising critical thinking. If we passively accept the solutions offered by AI, we might lose our ability to think autonomously and develop innovative ideas. In an increasingly automated world, it is crucial that humans continue to question and challenge the results generated by AI."

Furthermore, transparency and trust in AI systems represent another major dilemma. How can we be sure that these systems are free from bias or distortion and that they provide accurate and reliable information? "The growing trend of using synthetic or artificially generated data could compromise our perception of reality and negatively influence our decision-making processes," the professors warn.

AI could even take over our capacity for introspection, they note: the act of reflecting on one's own thoughts and feelings, a uniquely human process. With AI's advancement, it may become possible to rely on intelligent systems to analyze our behaviors and mental states. This raises the question: to what extent can we truly understand ourselves through AI analysis? And can AI replicate the complexity of subjective experience?

The Future of Cognitive Enhancement

Despite these questions, System 0 also offers enormous opportunities, the professors point out. Thanks to its ability to process complex data quickly and efficiently, AI can support humanity in tackling problems that exceed our natural cognitive capacities. Whether solving complex scientific issues, analyzing massive datasets, or managing intricate social systems, AI could become an indispensable ally.

To harness the potential of System 0, the study's authors argue, ethical and responsible guidelines for its use urgently need to be developed. "Transparency, accountability, and digital literacy are key elements to enable people to critically interact with AI," they say. "Educating the public on how to navigate this new cognitive environment will be crucial to avoid the risks of excessive dependence on these systems."

The Future of Human Thought

They conclude that, if left unchecked, System 0 could interfere with human thinking in the future: "It is essential that we remain aware and critical in how we use it; the true potential of System 0 will depend on our ability to guide it in the right direction."

Reference: "The case for human–AI interaction as system 0 thinking" by Massimo Chiriatti, Marianna Ganapini, Enrico Panai, Mario Ubiali and Giuseppe Riva, 22 October 2024, Nature Human Behaviour.

DOI: 10.1038/s41562-024-01995-5

News

Scientists Melt Cancer’s Hidden “Power Hubs” and Stop Tumor Growth

Researchers discovered that in a rare kidney cancer, RNA builds droplet-like hubs that act as growth control centers inside tumor cells. By engineering a molecular switch to dissolve these hubs, they were able to halt cancer [...]

Platelet-inspired nanoparticles could improve treatment of inflammatory diseases

Scientists have developed platelet-inspired nanoparticles that deliver anti-inflammatory drugs directly to brain-computer interface implants, doubling their effectiveness. Scientists have found a way to improve the performance of brain-computer interface (BCI) electrodes by delivering anti-inflammatory drugs directly [...]

After 150 years, a new chapter in cancer therapy is finally beginning

For decades, researchers have been looking for ways to destroy cancer cells in a targeted manner without further weakening the body. But for many patients whose immune system is severely impaired by chemotherapy or radiation, [...]

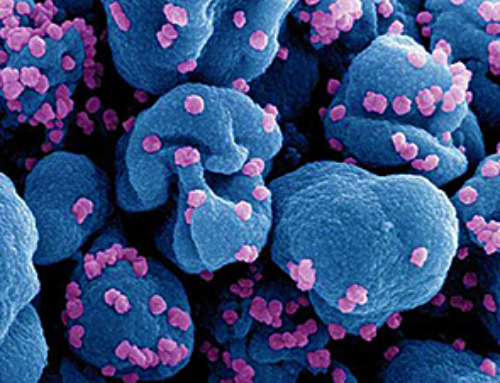

Older chemical libraries show promise for fighting resistant strains of COVID-19 virus

SARS‑CoV‑2, the virus that causes COVID-19, continues to mutate, with some newer strains becoming less responsive to current antiviral treatments like Paxlovid. Now, University of California San Diego scientists and an international team of [...]

Lower doses of immunotherapy for skin cancer give better results, study suggests

According to a new study, lower doses of approved immunotherapy for malignant melanoma can give better results against tumors, while reducing side effects. This is reported by researchers at Karolinska Institutet in the Journal of the National [...]

Researchers highlight five pathways through which microplastics can harm the brain

Microplastics could be fueling neurodegenerative diseases like Alzheimer's and Parkinson's, with a new study highlighting five ways microplastics can trigger inflammation and damage in the brain. More than 57 million people live with dementia, [...]

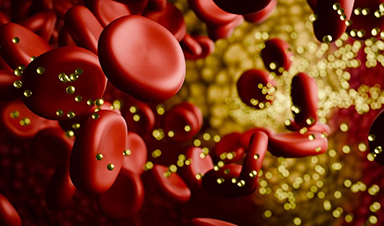

Tiny Metal Nanodots Obliterate Cancer Cells While Largely Sparing Healthy Tissue

Scientists have developed tiny metal-oxide particles that push cancer cells past their stress limits while sparing healthy tissue. An international team led by RMIT University has developed tiny particles called nanodots, crafted from a metallic compound, [...]

Gold Nanoclusters Could Supercharge Quantum Computers

Researchers found that gold “super atoms” can behave like the atoms in top-tier quantum systems—only far easier to scale. These tiny clusters can be customized at the molecular level, offering a powerful, tunable foundation [...]

A single shot of HPV vaccine may be enough to fight cervical cancer, study finds

WASHINGTON -- A single HPV vaccination appears just as effective as two doses at preventing the viral infection that causes cervical cancer, researchers reported Wednesday. HPV, or human papillomavirus, is very common and spread [...]

New technique overcomes technological barrier in 3D brain imaging

Scientists at the Swiss Light Source SLS have succeeded in mapping a piece of brain tissue in 3D at unprecedented resolution using X-rays, non-destructively. The breakthrough overcomes a long-standing technological barrier that had limited [...]

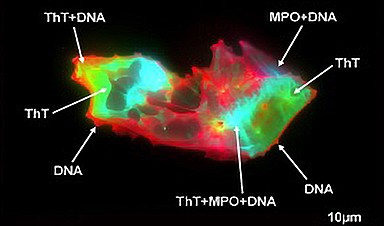

Scientists Uncover Hidden Blood Pattern in Long COVID

Researchers found persistent microclot and NET structures in Long COVID blood that may explain long-lasting symptoms. Researchers examining Long COVID have identified a structural connection between circulating microclots and neutrophil extracellular traps (NETs). The [...]

This Cellular Trick Helps Cancer Spread, but Could Also Stop It

Groups of normal cbiells can sense far into their surroundings, helping explain cancer cell migration. Understanding this ability could lead to new ways to limit tumor spread. The tale of the princess and the [...]

New mRNA therapy targets drug-resistant pneumonia

Bacteria that multiply on surfaces are a major headache in health care when they gain a foothold on, for example, implants or in catheters. Researchers at Chalmers University of Technology in Sweden have found [...]

Current Heart Health Guidelines Are Failing To Catch a Deadly Genetic Killer

New research reveals that standard screening misses most people with a common inherited cholesterol disorder. A Mayo Clinic study reports that current genetic screening guidelines overlook most people who have familial hypercholesterolemia, an inherited disorder that [...]

Scientists Identify the Evolutionary “Purpose” of Consciousness

Summary: Researchers at Ruhr University Bochum explore why consciousness evolved and why different species developed it in distinct ways. By comparing humans with birds, they show that complex awareness may arise through different neural architectures yet [...]

Novel mRNA therapy curbs antibiotic-resistant infections in preclinical lung models

Researchers at the Icahn School of Medicine at Mount Sinai and collaborators have reported early success with a novel mRNA-based therapy designed to combat antibiotic-resistant bacteria. The findings, published in Nature Biotechnology, show that in [...]