Does the advent of machine learning mean the classic methodology of hypothesize, predict and test has had its day?

According to an apocryphal story, Isaac Newton began thinking about gravity after an apple fell on his head. Much experimentation and data analysis later, he realised there was a fundamental relationship between force, mass and acceleration. He formulated a theory to describe that relationship, his second law of motion, which can be expressed as an equation, F=ma, and used it to predict the behaviour of objects other than apples. His predictions turned out to be right (if not always precise enough for those who came later).
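
To make the idea of a theory as a compact, reusable rule concrete, here is a minimal Python sketch of F=ma rearranged as a = F/m. The function name and the example forces and masses are hypothetical, chosen only for illustration. The point is that one equation covers apples, bricks and anything else with a mass, without any per-object data.

```python
def predicted_acceleration(force_newtons: float, mass_kg: float) -> float:
    """Newton's second law rearranged: a = F / m (SI units)."""
    if mass_kg <= 0:
        raise ValueError("mass must be positive")
    return force_newtons / mass_kg


# Hypothetical objects, all pushed with the same 10 N force.
# A single equation predicts every case; nothing is looked up per object.
masses_kg = {"apple": 0.25, "brick": 2.0, "bowling ball": 5.0}
for name, mass in masses_kg.items():
    print(f"{name}: {predicted_acceleration(10.0, mass)} m/s^2")
```
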

Contrast how science is increasingly done today. Facebook’s machine learning tools predict your preferences better than any psychologist. AlphaFold, a program built by DeepMind, has produced the most accurate predictions yet of protein structures based on the amino acids they contain. Both are completely silent on why they work: why you prefer this or that information; why this sequence generates that structure.

You can’t lift a curtain and peer into the mechanism. They offer up no explanation, no set of rules for converting this into that: no theory, in a word. They just work, and work well. We witness the social effects of Facebook’s predictions daily. AlphaFold has yet to make its impact felt, but many are convinced it will change medicine.

Somewhere between Newton and Mark Zuckerberg, theory took a back seat. In 2008, Chris Anderson, the then editor-in-chief of Wired magazine, predicted its demise. So much data had accumulated, he argued, and computers were already so much better than us at finding relationships within it, that our theories were being exposed for what they were – oversimplifications of reality. Soon, the old scientific method – hypothesize, predict, test – would be relegated to the dustbin of history. We’d stop looking for the causes of things and be satisfied with correlations.
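
Anderson’s “correlations are enough” stance can be caricatured in a few lines. The sketch below is a hypothetical, deliberately tiny stand-in (real systems such as AlphaFold are far more sophisticated neural networks): a k-nearest-neighbour predictor that answers a new question by averaging the outcomes of the most similar past cases. The observations and names are invented for illustration; the data happen to obey F=ma, but the predictor is never told the rule, so nothing in it could be read as an explanation.

```python
import math

# Invented observations: (force in N, mass in kg) -> measured acceleration in m/s^2.
# They happen to satisfy a = F / m, but that rule appears nowhere below.
OBSERVATIONS = [
    ((10.0, 2.0), 5.0),
    ((10.0, 5.0), 2.0),
    ((20.0, 4.0), 5.0),
    ((6.0, 3.0), 2.0),
]


def predict_by_similarity(query, data, k=1):
    """k-nearest-neighbour prediction: average the outcomes of the k past
    cases whose inputs are closest (Euclidean distance) to the query."""
    if not 1 <= k <= len(data):
        raise ValueError("k must be between 1 and the number of observations")
    ranked = sorted(data, key=lambda case: math.dist(query, case[0]))
    return sum(outcome for _, outcome in ranked[:k]) / k


print(predict_by_similarity((10.0, 2.1), OBSERVATIONS))   # close to a stored case
print(predict_by_similarity((100.0, 1.0), OBSERVATIONS))  # far outside the data
```

Both calls return 5.0. The first is a good answer; the second is the outcome of the nearest stored case, although F=ma would give 100 m/s². Without a rule, the predictor can only echo the data it has.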

With the benefit of hindsight, we can say that what Anderson saw is true (he wasn’t alone). The complexity that this wealth of data has revealed to us cannot be captured by theory as traditionally understood. “We have leapfrogged over our ability to even write the theories that are going to be useful for description,” says computational neuroscientist Peter Dayan, director of the Max Planck Institute for Biological Cybernetics in Tübingen, Germany. “We don’t even know what they would look like.”

But Anderson’s prediction of the end of theory looks to have been premature – or maybe his thesis was itself an oversimplification. There are several reasons why theory refuses to die, despite the successes of such theory-free prediction engines as Facebook and AlphaFold. All are illuminating, because they force us to ask: what’s the best way to acquire knowledge and where does science go from here?

The first reason is that we’ve realised that artificial intelligences (AIs), particularly a form of machine learning called neural networks, which learn from data without having to be fed explicit instructions, are themselves fallible. Think of the prejudice that has been documented in Google’s search engine and Amazon’s hiring tools.

The second is that humans turn out to be deeply uncomfortable with theory-free science. We don’t like dealing with a black box – we want to know why.

And third, there may still be plenty of theory of the traditional kind – that is, graspable by humans – that usefully explains much but has yet to be uncovered.

So theory isn’t dead, yet, but it is changing – perhaps beyond recognition. “The theories that make sense when you have huge amounts of data look quite different from those that make sense when you have small amounts,” says Tom Griffiths, a psychologist at Princeton University.

Griffiths has been using neural nets to help him improve on existing theories in his domain, which is human decision-making. A popular theory of how people make decisions when economic risk is involved is prospect theory, which was formulated by behavioural economists Daniel Kahneman and Amos Tversky in the 1970s (it later won Kahneman a Nobel prize). The idea at its core is that people are sometimes, but not always, rational…
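
The article does not give prospect theory’s equations. As a minimal sketch, assuming the power-law value function and the median parameter estimates (α = β = 0.88, loss-aversion coefficient λ = 2.25) that Tversky and Kahneman reported for their 1992 cumulative version of the theory, the asymmetry between gains and losses can be written as below. Probability weighting, the theory’s other main ingredient, is left out, and the function name is illustrative.

```python
def prospect_value(x: float, alpha: float = 0.88, beta: float = 0.88,
                   loss_aversion: float = 2.25) -> float:
    """Subjective value of an outcome x measured from a reference point (x = 0).

    Gains are valued as x**alpha; losses as -loss_aversion * (-x)**beta,
    so a loss looms larger than a gain of the same size.
    Parameter defaults are assumed (Tversky and Kahneman's 1992 median estimates).
    """
    if x >= 0:
        return x ** alpha
    return -loss_aversion * (-x) ** beta


# A fair coin flip: win 100 or lose 100. Its expected monetary value is zero,
# but its expected subjective value under this sketch is negative.
gamble = 0.5 * prospect_value(100) + 0.5 * prospect_value(-100)
print(round(gamble, 2))
```

Under these assumptions, a decision-maker weighing only expected money would be indifferent to the coin flip, whereas the sketch scores it as unattractive because losses are weighted 2.25 times as heavily as equal-sized gains.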

News

Treating a Common Dental Infection… Effects That Extend Far Beyond the Mouth

Successful root canal treatment may help lower inflammation associated with heart disease and improve blood sugar and cholesterol levels. Treating an infected tooth with a successful root canal procedure may do more than relieve [...]

Microplastics found in prostate tumors in small study

In a new study, researchers found microplastics deep inside prostate cancer tumors, raising more questions about the role the ubiquitous pollutants play in public health. The findings — which come from a small study of 10 [...]

All blue-eyed people have this one thing in common

All Blue-Eyed People Have This One Thing In Common Blue Eyes Aren’t Random—Research Traces Them Back to One Prehistoric Human It sounds like a myth at first — something you’d hear in a folklore [...]

Scientists reveal how exercise protects the brain from Alzheimer’s

Researchers at UC San Francisco have identified a biological process that may explain why exercise sharpens thinking and memory. Their findings suggest that physical activity strengthens the brain's built in defense system, helping protect [...]

NanoMedical Brain/Cloud Interface – Explorations and Implications. A new book from Frank Boehm

New book from Frank Boehm, NanoappsMedical Inc Founder: This book explores the future hypothetical possibility that the cerebral cortex of the human brain might be seamlessly, safely, and securely connected with the Cloud via [...]

Deadly Pancreatic Cancer Found To “Wire Itself” Into the Body’s Nerves

A newly discovered link between pancreatic cancer and neural signaling reveals a promising drug target that slows tumor growth by blocking glutamate uptake. Pancreatic cancer is among the most deadly cancers, and scientists are [...]

This Simple Brain Exercise May Protect Against Dementia for 20 Years

A long-running study following thousands of older adults suggests that a relatively brief period of targeted brain training may have effects that last decades. Starting in the late 1990s, close to 3,000 older adults [...]

Scientists Crack a 50-Year Tissue Mystery With Major Cancer Implications

Researchers have resolved a 50-year-old scientific mystery by identifying the molecular mechanism that allows tissues to regenerate after severe damage. The discovery could help guide future treatments aimed at reducing the risk of cancer [...]

This New Blood Test Can Detect Cancer Before Tumors Appear

A new CRISPR-powered light sensor can detect the faintest whispers of cancer in a single drop of blood. Scientists have created an advanced light-based sensor capable of identifying extremely small amounts of cancer biomarkers [...]

Blindness Breakthrough? This Snail Regrows Eyes in 30 Days

A snail that regrows its eyes may hold the genetic clues to restoring human sight. Human eyes are intricate organs that cannot regrow once damaged. Surprisingly, they share key structural features with the eyes [...]

This Is Why the Same Virus Hits People So Differently

Scientists have mapped how genetics and life experiences leave lasting epigenetic marks on immune cells. The discovery helps explain why people respond so differently to the same infections and could lead to more personalized [...]

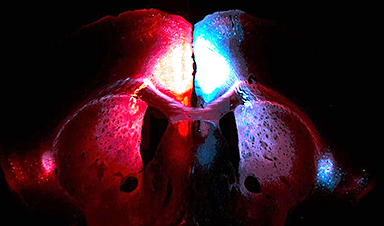

Rejuvenating neurons restores learning and memory in mice

EPFL scientists report that briefly switching on three “reprogramming” genes in a small set of memory-trace neurons restored memory in aged mice and in mouse models of Alzheimer’s disease to level of healthy young [...]

New book from Nanoappsmedical Inc. – Global Health Care Equivalency

A new book by Frank Boehm, NanoappsMedical Inc. Founder. This groundbreaking volume explores the vision of a Global Health Care Equivalency (GHCE) system powered by artificial intelligence and quantum computing technologies, operating on secure [...]

New Molecule Blocks Deadliest Brain Cancer at Its Genetic Root

Researchers have identified a molecule that disrupts a critical gene in glioblastoma. Scientists at the UVA Comprehensive Cancer Center say they have found a small molecule that can shut down a gene tied to glioblastoma, a [...]

Scientists Finally Solve a 30-Year-Old Cancer Mystery Hidden in Rye Pollen

Nearly 30 years after rye pollen molecules were shown to slow tumor growth in animals, scientists have finally determined their exact three-dimensional structures. Nearly 30 years ago, researchers noticed something surprising in rye pollen: [...]

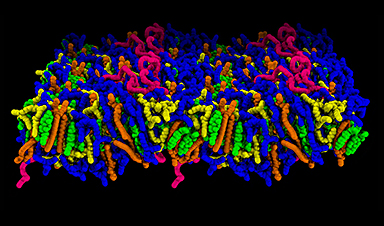

How lipid nanoparticles carrying vaccines release their cargo

A study from FAU has shown that lipid nanoparticles restructure their membrane significantly after being absorbed into a cell and ending up in an acidic environment. Vaccines and other medicines are often packed in [...]