It’s becoming increasingly commonplace for people to develop intimate, long-term relationships with artificial intelligence (AI) technologies. At their extreme, people have “married” their AI companions in non-legally binding ceremonies, and at least two people have killed themselves following AI chatbot advice. In an opinion paper publishing April 11 in the Cell Press journal Trends in Cognitive Sciences, psychologists explore ethical issues associated with human-AI relationships, including their potential to disrupt human-human relationships and give harmful advice.

“The ability for AI to now act like a human and enter into long-term communications really opens up a new can of worms,” says lead author Daniel B. Shank of Missouri University of Science & Technology, who specializes in social psychology and technology. “If people are engaging in romance with machines, we really need psychologists and social scientists involved.”

AI romance or companionship is more than a one-off conversation, note the authors. Through weeks and months of intense conversations, these AIs can become trusted companions who seem to know and care about their human partners. And because these relationships can seem easier than human-human relationships, the researchers argue that AIs could interfere with human social dynamics.

“A real worry is that people might bring expectations from their AI relationships to their human relationships. Certainly, in individual cases it’s disrupting human relationships, but it’s unclear whether that’s going to be widespread.”

Daniel B. Shank, lead author, Missouri University of Science & Technology

There’s also the concern that AIs can offer harmful advice. Given AIs’ predilection to hallucinate (i.e., fabricate information) and to reproduce pre-existing biases, even short-term conversations with AIs can be misleading, and the researchers say this can be more problematic in long-term AI relationships.

“With relational AIs, the issue is that this is an entity that people feel they can trust: it’s ‘someone’ that has shown they care and that seems to know the person in a deep way, and we assume that ‘someone’ who knows us better is going to give better advice,” says Shank. “If we start thinking of an AI that way, we’re going to start believing that they have our best interests in mind, when in fact, they could be fabricating things or advising us in really bad ways.”

The suicides are an extreme example of this negative influence, but the researchers say that these close human-AI relationships could also open people up to manipulation, exploitation, and fraud.

News

The Secret “Radar” Bacteria Use To Outsmart Their Enemies

A chemical radar allows bacteria to sense and eliminate predators. Investigating how microorganisms communicate deepens our understanding of the complex ecological interactions that shape our environment is an area of key focus for the [...]

Psychologists explore ethical issues associated with human-AI relationships

It's becoming increasingly commonplace for people to develop intimate, long-term relationships with artificial intelligence (AI) technologies. At their extreme, people have "married" their AI companions in non-legally binding ceremonies, and at least two people [...]

When You Lose Weight, Where Does It Actually Go?

Most health professionals lack a clear understanding of how body fat is lost, often subscribing to misconceptions like fat converting to energy or muscle. The truth is, fat is actually broken down into carbon [...]

How Everyday Plastics Quietly Turn Into DNA-Damaging Nanoparticles

The same unique structure that makes plastic so versatile also makes it susceptible to breaking down into harmful micro- and nanoscale particles. The world is saturated with trillions of microscopic and nanoscopic plastic particles, some smaller [...]

AI Outperforms Physicians in Real-World Urgent Care Decisions, Study Finds

The study, conducted at the virtual urgent care clinic Cedars-Sinai Connect in LA, compared recommendations given in about 500 visits of adult patients with relatively common symptoms – respiratory, urinary, eye, vaginal and dental. [...]

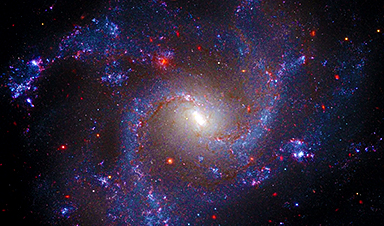

Challenging the Big Bang: A Multi-Singularity Origin for the Universe

In a study published in the journal Classical and Quantum Gravity, Dr. Richard Lieu, a physics professor at The University of Alabama in Huntsville (UAH), which is a part of The University of Alabama System, suggests that [...]

New drug restores vision by regenerating retinal nerves

Vision is one of the most crucial human senses, yet over 300 million people worldwide are at risk of vision loss due to various retinal diseases. While recent advancements in retinal disease treatments have [...]

Shingles vaccine cuts dementia risk by 20%, new study shows

A shingles shot may do more than prevent rash — it could help shield the aging brain from dementia, according to a landmark study using real-world data from the UK. A routine vaccine could [...]

AI Predicts Sudden Cardiac Arrest Days Before It Strikes

AI can now predict deadly heart arrhythmias up to two weeks in advance, potentially transforming cardiac care. Artificial intelligence could play a key role in preventing many cases of sudden cardiac death, according to [...]

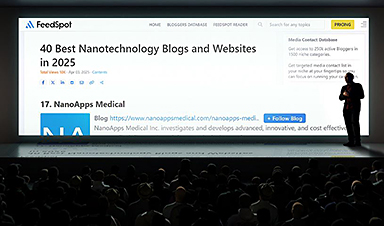

NanoApps Medical is a Top 20 Feedspot Nanotech Blog

There is an ocean of Nanotechnology news published every day. Feedspot saves us a lot of time and we recommend it. We have been using it since 2018. Feedspot is a freemium online RSS [...]

This Startup Says It Can Clean Your Blood of Microplastics

This is a non-exhaustive list of places microplastics have been found: Mount Everest, the Mariana Trench, Antarctic snow, clouds, plankton, turtles, whales, cattle, birds, tap water, beer, salt, human placentas, semen, breast milk, feces, testicles, [...]

New Blood Test Detects Alzheimer’s and Tracks Its Progression With 92% Accuracy

The new test could help identify which patients are most likely to benefit from new Alzheimer’s drugs. A newly developed blood test for Alzheimer’s disease not only helps confirm the presence of the condition but also [...]

The CDC buried a measles forecast that stressed the need for vaccinations

This story was originally published on ProPublica, a nonprofit newsroom that investigates abuses of power. Sign up to receive our biggest stories as soon as they’re published. ProPublica — Leaders at the Centers for Disease Control and Prevention [...]

Light-Driven Plasmonic Microrobots for Nanoparticle Manipulation

A recent study published in Nature Communications presents a new microrobotic platform designed to improve the precision and versatility of nanoparticle manipulation using light. Led by Jin Qin and colleagues, the research addresses limitations in traditional [...]

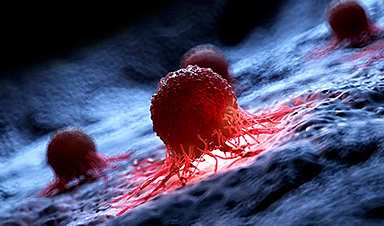

Cancer’s “Master Switch” Blocked for Good in Landmark Study

Researchers discovered peptides that permanently block a key cancer protein once thought untreatable, using a new screening method to test their effectiveness inside cells. For the first time, scientists have identified promising drug candidates [...]

AI self-cloning claims: A new frontier or a looming threat?

Chinese scientists claim that some AI models can replicate themselves and protect against shutdown. Has artificial intelligence crossed the so-called red line? Chinese researchers have published two reports on arXiv claiming that some artificial [...]