Highlighting the absence of evidence for the controllability of AI, Dr. Yampolskiy warns of the existential risks involved and advocates for a cautious approach to AI development, with a focus on safety and risk minimization.

There is no current evidence that AI can be controlled safely, according to an extensive review, and without proof that AI can be controlled, it should not be developed, a researcher warns.

Despite the recognition that the problem of AI control may be one of the most important problems facing humanity, it remains poorly understood, poorly defined, and poorly researched, Dr. Roman V. Yampolskiy explains.

In his upcoming book, AI: Unexplainable, Unpredictable, Uncontrollable, AI Safety expert Dr. Yampolskiy looks at the ways that AI has the potential to dramatically reshape society, not always to our advantage.

He explains: “We are facing an almost guaranteed event with the potential to cause an existential catastrophe. No wonder many consider this to be the most important problem humanity has ever faced. The outcome could be prosperity or extinction, and the fate of the universe hangs in the balance.”

Uncontrollable superintelligence

Dr. Yampolskiy has carried out an extensive review of the scientific literature on AI and states he has found no proof that AI can be safely controlled – and even if there are some partial controls, they would not be enough.

He explains: “Why do so many researchers assume that AI control problem is solvable? To the best of our knowledge, there is no evidence for that, no proof. Before embarking on a quest to build a controlled AI, it is important to show that the problem is solvable.

“This, combined with statistics that show the development of AI superintelligence is an almost guaranteed event, shows we should be supporting a significant AI safety effort.”

He argues our ability to produce intelligent software far outstrips our ability to control or even verify it. After a comprehensive literature review, he suggests advanced intelligent systems can never be fully controllable and so will always present a certain level of risk regardless of the benefit they provide. He believes it should be the goal of the AI community to minimize such risk while maximizing potential benefits.

What are the obstacles?

AI (and superintelligence) differs from other programs in its ability to learn new behaviors, adjust its performance, and act semi-autonomously in novel situations.

One issue with making AI ‘safe’ is that the possible decisions and failures of a superintelligent being are infinite as it becomes more capable, so there are an infinite number of safety issues. Simply predicting the issues may not be possible, and mitigating them with security patches may not be enough.

At the same time, Yampolskiy explains, AI cannot explain what it has decided, and/or we cannot understand the explanation given as humans are not smart enough to understand the concepts implemented. If we do not understand AI’s decisions and we only have a ‘black box’, we cannot understand the problem and reduce the likelihood of future accidents.

For example, AI systems are already being tasked with making decisions in healthcare, investing, employment, banking and security, to name a few. Such systems should be able to explain how they arrived at their decisions, particularly to show that they are bias-free.

Yampolskiy explains: “If we grow accustomed to accepting AI’s answers without an explanation, essentially treating it as an Oracle system, we would not be able to tell if it begins providing wrong or manipulative answers.”

Controlling the uncontrollable

As the capability of AI increases, its autonomy also increases but our control over it decreases, Yampolskiy explains, and increased autonomy is synonymous with decreased safety.

For example, for superintelligence to avoid acquiring inaccurate knowledge and remove all bias from its programmers, it could ignore all such knowledge and rediscover/prove everything from scratch, but that would also remove any pro-human bias.

“Less intelligent agents (people) can’t permanently control more intelligent agents (ASIs). This is not because we may fail to find a safe design for superintelligence in the vast space of all possible designs, it is because no such design is possible, it doesn’t exist. Superintelligence is not rebelling, it is uncontrollable to begin with,” he explains.

“Humanity is facing a choice, do we become like babies, taken care of but not in control or do we reject having a helpful guardian but remain in charge and free.”

He suggests that an equilibrium point could be found at which we sacrifice some capability in return for some control, at the cost of providing the system with a certain degree of autonomy.

Aligning human values

One control suggestion is to design a machine that precisely follows human orders, but Yampolskiy points out the potential for conflicting orders, misinterpretation or malicious use.

He explains: “Humans in control can result in contradictory or explicitly malevolent orders, while AI in control means that humans are not.”

If AI acted more as an advisor, it could bypass issues with misinterpretation of direct orders and the potential for malevolent orders, but the author argues that for AI to be a useful advisor, it must have its own superior values.

“Most AI safety researchers are looking for a way to align future superintelligence to the values of humanity. Value-aligned AI will be biased by definition, pro-human bias, good or bad is still a bias. The paradox of value-aligned AI is that a person explicitly ordering an AI system to do something may get a “no” while the system tries to do what the person actually wants. Humanity is either protected or respected, but not both,” he explains.

Minimizing risk

To minimize the risk of AI, he says it needs to be modifiable with ‘undo’ options, limitable, transparent, and easy to understand in human language.

He suggests all AI should be categorized as controllable or uncontrollable, that nothing should be taken off the table, and that limited moratoriums, and even partial bans on certain types of AI technology, should be considered.

He says this is no cause for discouragement: “Rather it is a reason, for more people, to dig deeper and to increase effort, and funding for AI Safety and Security research. We may not ever get to 100% safe AI, but we can make AI safer in proportion to our efforts, which is a lot better than doing nothing. We need to use this opportunity wisely.”

News

How the FDA opens the door to risky chemicals in America’s food supply

Lining the shelves of American supermarkets are food products with chemicals linked to health concerns. To a great extent, the FDA allows food companies to determine for themselves whether their ingredients and additives are [...]

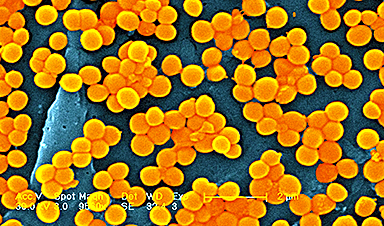

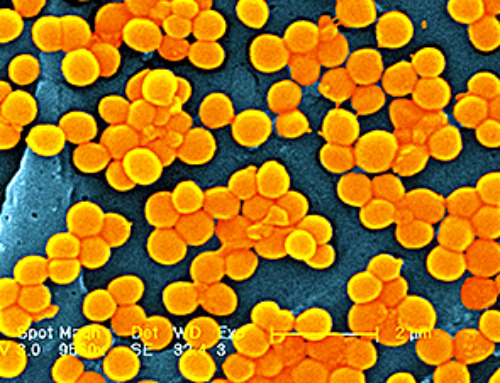

Superbug crisis could get worse, killing nearly 40 million people by 2050

The number of lives lost around the world due to infections that are resistant to the medications intended to treat them could increase nearly 70% by 2050, a new study projects, further showing the [...]

How Can Nanomaterials Be Programmed for Different Applications?

Nanomaterials are no longer just small—they are becoming smart. Across fields like medicine, electronics, energy, and materials science, researchers are now programming nanomaterials to behave in intentional, responsive ways. These advanced materials are designed [...]

Microplastics Are Invading Our Arteries, and It Could Be Increasing Your Risk of Stroke

Higher levels of micronanoplastics were found in carotid artery plaque, especially in people with stroke symptoms, suggesting a potential new risk factor. People with plaque buildup in the arteries of their neck have been [...]

Gene-editing therapy shows early success in fighting advanced gastrointestinal cancers

Researchers at the University of Minnesota have completed a first-in-human clinical trial testing a CRISPR/Cas9 gene-editing technique to help the immune system fight advanced gastrointestinal (GI) cancers. The results, recently published in The Lancet Oncology, show encouraging [...]

Engineered extracellular vesicles facilitate delivery of advanced medicines

Graphic abstract of the development of VEDIC and VFIC systems for high efficiency intracellular protein delivery in vitro and in vivo. Credit: Nature Communications (2025). DOI: 10.1038/s41467-025-59377-y. https://www.nature.com/articles/s41467-025-59377-y Researchers at Karolinska Institutet have developed a technique [...]

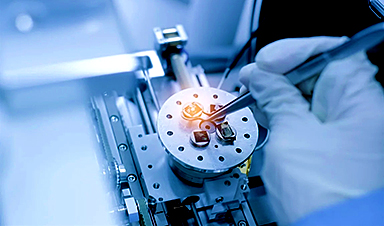

Brain-computer interface allows paralyzed users to customize their sense of touch

University of Pittsburgh School of Medicine scientists are one step closer to developing a brain-computer interface, or BCI, that allows people with tetraplegia to restore their lost sense of touch. While exploring a digitally [...]

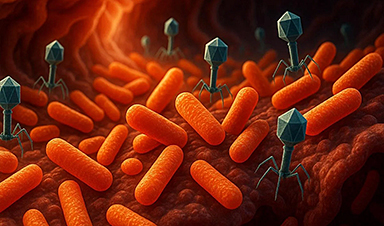

Scientists Flip a Gut Virus “Kill Switch” – Expose a Hidden Threat in Antibiotic Treatment

Scientists have long known that bacteriophages, viruses that infect bacteria, live in our gut, but exactly what they do has remained elusive. Researchers developed a clever mouse model that can temporarily eliminate these phages [...]

Enhanced Antibacterial Polylactic Acid-Curcumin Nanofibers for Wound Dressing

Background Wound healing is a complex physiological process that can be compromised by infection and impaired tissue regeneration. Conventional dressings, typically made from natural fibers such as cotton or linen, offer limited functionality. Nanofiber [...]

Global Nanomaterial Regulation: A Country-by-Country Comparison

Nanomaterials are materials with at least one dimension smaller than 100 nanometres (about 100,000 times thinner than a human hair). Because of their tiny size, they have unique properties that can be useful in [...]

Pandemic Potential: Scientists Discover 3 Hotspots of Deadly Emerging Disease in the US

Virginia Tech researchers discovered six new rodent carriers of hantavirus and identified U.S. hotspots, highlighting the virus’s adaptability and the impact of climate and ecology on its spread. Hantavirus recently drew public attention following reports [...]

Studies detail high rates of long COVID among healthcare, dental workers

Researchers have estimated approximately 8% of Americas have ever experienced long COVID, or lasting symptoms, following an acute COVID-19 infection. Now two recent international studies suggest that the percentage is much higher among healthcare workers [...]

Melting Arctic Ice May Unleash Ancient Deadly Diseases, Scientists Warn

Melting Arctic ice increases human and animal interactions, raising the risk of infectious disease spread. Researchers urge early intervention and surveillance. Climate change is opening new pathways for the spread of infectious diseases such [...]

Scientists May Have Found a Secret Weapon To Stop Pancreatic Cancer Before It Starts

Researchers at Cold Spring Harbor Laboratory have found that blocking the FGFR2 and EGFR genes can stop early-stage pancreatic cancer from progressing, offering a promising path toward prevention. Pancreatic cancer is expected to become [...]

Breakthrough Drug Restores Vision: Researchers Successfully Reverse Retinal Damage

Blocking the PROX1 protein allowed KAIST researchers to regenerate damaged retinas and restore vision in mice. Vision is one of the most important human senses, yet more than 300 million people around the world are at [...]

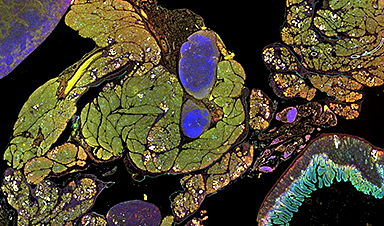

Differentiating cancerous and healthy cells through motion analysis

Researchers from Tokyo Metropolitan University have found that the motion of unlabeled cells can be used to tell whether they are cancerous or healthy. They observed malignant fibrosarcoma [...]