MIT researchers have introduced an efficient reinforcement learning algorithm that enhances AI’s decision-making in complex scenarios, such as city traffic control.

By strategically selecting the tasks used for training, the algorithm achieves significantly improved performance with far less data, making training up to 50 times more efficient. This method not only saves time and resources but also paves the way for more effective AI applications in real-world settings.

AI Decision-Making

Across fields like robotics, medicine, and political science, researchers are working to train AI systems to make meaningful and impactful decisions. For instance, an AI system designed to manage traffic in a congested city could help drivers reach their destinations more quickly while enhancing safety and sustainability.

However, teaching AI to make effective decisions is a complex challenge.

Challenges in Reinforcement Learning

Reinforcement learning models, the foundation of many AI decision-making systems, often struggle when confronted with even slight changes in the tasks they are trained for. For example, in traffic management, a model might falter when handling intersections with varying speed limits, lane configurations, or traffic patterns.

To boost the reliability of reinforcement learning models for complex tasks with variability, MIT researchers have introduced a more efficient algorithm for training them.

Strategic Task Selection in AI Training

The algorithm strategically selects the best tasks for training an AI agent so it can effectively perform all tasks in a collection of related tasks. In the case of traffic signal control, each task could be one intersection in a task space that includes all intersections in the city.

By focusing on a smaller number of intersections that contribute the most to the algorithm’s overall effectiveness, this method maximizes performance while keeping the training cost low.

Enhancing AI Efficiency With a Simple Algorithm

The researchers found that their technique was between five and 50 times more efficient than standard approaches on an array of simulated tasks. This gain in efficiency helps the algorithm learn a better solution faster, ultimately improving the performance of the AI agent.

“We were able to see incredible performance improvements, with a very simple algorithm, by thinking outside the box. An algorithm that is not very complicated stands a better chance of being adopted by the community because it is easier to implement and easier for others to understand,” says senior author Cathy Wu, the Thomas D. and Virginia W. Cabot Career Development Associate Professor in Civil and Environmental Engineering (CEE) and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

She is joined on the paper by lead author Jung-Hoon Cho, a CEE graduate student; Vindula Jayawardana, a graduate student in the Department of Electrical Engineering and Computer Science (EECS); and Sirui Li, an IDSS graduate student. The research will be presented at the Conference on Neural Information Processing Systems.

Balancing Training Approaches

To train an algorithm to control traffic lights at many intersections in a city, an engineer would typically choose between two main approaches. She can train one algorithm for each intersection independently, using only that intersection’s data, or train a larger algorithm using data from all intersections and then apply it to each one.

But each approach comes with its share of downsides. Training a separate algorithm for each task (such as a given intersection) is a time-consuming process that requires an enormous amount of data and computation, while training one algorithm for all tasks often leads to subpar performance.

Wu and her collaborators sought a sweet spot between these two approaches.

Advantages of Model-Based Transfer Learning

For their method, they choose a subset of tasks and train one algorithm for each task independently. Importantly, they strategically select individual tasks that are most likely to improve the algorithm’s overall performance on all tasks.

They leverage a common trick from the reinforcement learning field called zero-shot transfer learning, in which an already trained model is applied to a new task without being further trained. With transfer learning, the model often performs remarkably well on a new, neighboring task.
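As a rough illustration of zero-shot transfer, the toy sketch below "trains" a policy on one intersection and then scores it, unchanged, on other intersections. Everything here is hypothetical and chosen only for illustration: the `Intersection` and `FixedTimePolicy` classes, the `train` stand-in (which skips reinforcement learning entirely), and the linear scoring rule are assumptions, not the researchers' setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intersection:
    """Hypothetical task: a signal with one ideal green time, in seconds."""
    ideal_green_s: float


@dataclass(frozen=True)
class FixedTimePolicy:
    """Hypothetical model: always uses the same green time."""
    green_s: float


def train(task: Intersection) -> FixedTimePolicy:
    # Stand-in for RL training: return the policy that is ideal for this task.
    return FixedTimePolicy(green_s=task.ideal_green_s)


def evaluate(policy: FixedTimePolicy, task: Intersection) -> float:
    # Assumed toy score: 1.0 for a perfect match, falling linearly to 0.0
    # once the green time is off by 30 seconds or more.
    error_s = abs(policy.green_s - task.ideal_green_s)
    return max(0.0, 1.0 - error_s / 30.0)


source = Intersection(ideal_green_s=30.0)
neighbor = Intersection(ideal_green_s=33.0)
distant = Intersection(ideal_green_s=60.0)

policy = train(source)  # trained once, on the source task only

# Zero-shot transfer: the same policy is scored on new tasks with no retraining.
for name, task in [("source", source), ("neighbor", neighbor), ("distant", distant)]:
    print(name, evaluate(policy, task))
```

In this toy setting the policy keeps most of its score on the neighboring task and loses it on the distant one, which is the kind of degradation a task-selection method has to account for.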

“We know it would be ideal to train on all the tasks, but we wondered if we could get away with training on a subset of those tasks, apply the result to all the tasks, and still see a performance increase,” Wu says.

MBTL Algorithm: Optimizing Task Selection

To identify which tasks they should select to maximize expected performance, the researchers developed an algorithm called Model-Based Transfer Learning (MBTL).

The MBTL algorithm has two pieces. First, it models how well each algorithm would perform if it were trained independently on one task. Second, it models how much each algorithm’s performance would degrade if it were transferred to each other task, a concept known as generalization performance.

Explicitly modeling generalization performance allows MBTL to estimate the value of training on a new task.

MBTL does this sequentially, first choosing the task that leads to the highest performance gain, then selecting additional tasks that provide the biggest subsequent marginal improvements to overall performance.

Since MBTL only focuses on the most promising tasks, it can dramatically improve the efficiency of the training process.
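The sequential selection described above can be sketched as a greedy loop. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes each task is described by a single number (`contexts`), that estimated training performance is given (`train_perf`), that performance degrades linearly with the distance between tasks (`slope`), and that a task with no applicable model scores 0. The function names are hypothetical.

```python
def estimated_transfer(train_perf, contexts, slope):
    """est[i][j]: estimated score on task j of a model trained on task i.

    Assumption: the score drops linearly with the distance between tasks
    and never falls below 0.
    """
    return [
        [max(0.0, perf - slope * abs(src - tgt)) for tgt in contexts]
        for perf, src in zip(train_perf, contexts)
    ]


def greedy_select(est, budget):
    """Pick training tasks one at a time by largest marginal gain."""
    n_tasks = len(est[0])
    best = [0.0] * n_tasks  # best estimated score per task so far
    selected = []
    for _ in range(min(budget, len(est))):
        candidates = [i for i in range(len(est)) if i not in selected]

        def total_if_added(i):
            # Overall score if a model trained on task i joined the pool,
            # with each task served by its best available model.
            return sum(max(b, e) for b, e in zip(best, est[i]))

        pick = max(candidates, key=total_if_added)
        selected.append(pick)
        best = [max(b, e) for b, e in zip(best, est[pick])]
    return selected, best


# Eleven hypothetical tasks spread evenly over [0, 1].
contexts = [i / 10 for i in range(11)]
train_perf = [1.0] * len(contexts)
est = estimated_transfer(train_perf, contexts, slope=1.0)

selected, best = greedy_select(est, budget=3)
print(selected, sum(best) / len(best))
```

With these toy numbers the first pick is the middle task, since a model trained there loses the least when transferred to all the others; later picks fill in the regions the first model covers worst.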

Implications for Future AI Development

When the researchers tested this technique on simulated tasks, including controlling traffic signals, managing real-time speed advisories, and executing several classic control tasks, it was five to 50 times more efficient than other methods.

This means they could arrive at the same solution by training on far less data. For instance, with a 50x efficiency boost, the MBTL algorithm could train on just two tasks and achieve the same performance as a standard method that uses data from 100 tasks.
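The arithmetic behind that example is simple enough to check directly. The helper below is a hypothetical illustration that treats the efficiency gain as a plain ratio of training-task counts, which matches the example above but is a simplification of how efficiency would be measured in practice.

```python
import math


def tasks_needed(baseline_tasks: int, efficiency_gain: float) -> int:
    # Assumption: an N-times efficiency gain means N times fewer training tasks.
    return math.ceil(baseline_tasks / efficiency_gain)


print(tasks_needed(100, 50))  # 2 tasks at the top of the reported range
print(tasks_needed(100, 5))   # 20 tasks at the bottom of the reported range
```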

“From the perspective of the two main approaches, that means data from the other 98 tasks was not necessary or that training on all 100 tasks is confusing to the algorithm, so the performance ends up worse than ours,” Wu says.

With MBTL, adding even a small amount of additional training time could lead to much better performance.

In the future, the researchers plan to design MBTL algorithms that can extend to more complex problems, such as high-dimensional task spaces. They are also interested in applying their approach to real-world problems, especially in next-generation mobility systems.

Reference: “Model-Based Transfer Learning for Contextual Reinforcement Learning” by Jung-Hoon Cho, Vindula Jayawardana, Sirui Li and Cathy Wu, 21 November 2024, Computer Science > Machine Learning.

arXiv:2408.04498

The research is funded, in part, by a National Science Foundation CAREER Award, the Kwanjeong Educational Foundation PhD Scholarship Program, and an Amazon Robotics PhD Fellowship.

News

How Everyday Plastics Quietly Turn Into DNA-Damaging Nanoparticles

The same unique structure that makes plastic so versatile also makes it susceptible to breaking down into harmful micro- and nanoscale particles. The world is saturated with trillions of microscopic and nanoscopic plastic particles, some smaller [...]

AI Outperforms Physicians in Real-World Urgent Care Decisions, Study Finds

The study, conducted at the virtual urgent care clinic Cedars-Sinai Connect in LA, compared recommendations given in about 500 visits of adult patients with relatively common symptoms – respiratory, urinary, eye, vaginal and dental. [...]

Challenging the Big Bang: A Multi-Singularity Origin for the Universe

In a study published in the journal Classical and Quantum Gravity, Dr. Richard Lieu, a physics professor at The University of Alabama in Huntsville (UAH), which is a part of The University of Alabama System, suggests that [...]

New drug restores vision by regenerating retinal nerves

Vision is one of the most crucial human senses, yet over 300 million people worldwide are at risk of vision loss due to various retinal diseases. While recent advancements in retinal disease treatments have [...]

Shingles vaccine cuts dementia risk by 20%, new study shows

A shingles shot may do more than prevent rash — it could help shield the aging brain from dementia, according to a landmark study using real-world data from the UK. A routine vaccine could [...]

AI Predicts Sudden Cardiac Arrest Days Before It Strikes

AI can now predict deadly heart arrhythmias up to two weeks in advance, potentially transforming cardiac care. Artificial intelligence could play a key role in preventing many cases of sudden cardiac death, according to [...]

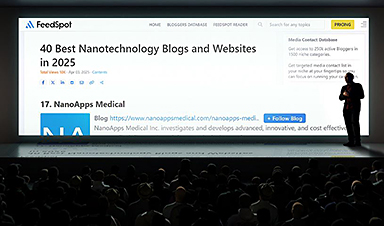

NanoApps Medical is a Top 20 Feedspot Nanotech Blog

There is an ocean of Nanotechnology news published every day. Feedspot saves us a lot of time and we recommend it. We have been using it since 2018. Feedspot is a freemium online RSS [...]

This Startup Says It Can Clean Your Blood of Microplastics

This is a non-exhaustive list of places microplastics have been found: Mount Everest, the Mariana Trench, Antarctic snow, clouds, plankton, turtles, whales, cattle, birds, tap water, beer, salt, human placentas, semen, breast milk, feces, testicles, [...]

New Blood Test Detects Alzheimer’s and Tracks Its Progression With 92% Accuracy

The new test could help identify which patients are most likely to benefit from new Alzheimer’s drugs. A newly developed blood test for Alzheimer’s disease not only helps confirm the presence of the condition but also [...]

The CDC buried a measles forecast that stressed the need for vaccinations

This story was originally published on ProPublica, a nonprofit newsroom that investigates abuses of power. Sign up to receive our biggest stories as soon as they’re published. ProPublica — Leaders at the Centers for Disease Control and Prevention [...]

Light-Driven Plasmonic Microrobots for Nanoparticle Manipulation

A recent study published in Nature Communications presents a new microrobotic platform designed to improve the precision and versatility of nanoparticle manipulation using light. Led by Jin Qin and colleagues, the research addresses limitations in traditional [...]

Cancer’s “Master Switch” Blocked for Good in Landmark Study

Researchers discovered peptides that permanently block a key cancer protein once thought untreatable, using a new screening method to test their effectiveness inside cells. For the first time, scientists have identified promising drug candidates [...]

AI self-cloning claims: A new frontier or a looming threat?

Chinese scientists claim that some AI models can replicate themselves and protect against shutdown. Has artificial intelligence crossed the so-called red line? Chinese researchers have published two reports on arXiv claiming that some artificial [...]

New Drug Turns Human Blood Into Mosquito-Killing Weapon

Nitisinone, a drug for rare diseases, kills mosquitoes when present in human blood and may become a new tool to fight malaria, offering longer-lasting, environmentally safer effects than ivermectin. Controlling mosquito populations is a [...]

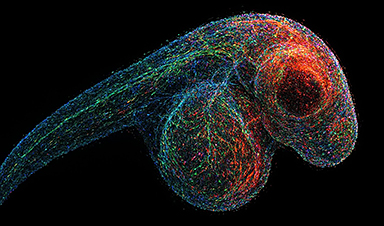

DNA Microscopy Creates 3D Maps of Life From the Inside Out

What if you could take a picture of every gene inside a living organism—not with light, but with DNA itself? Scientists at the University of Chicago have pioneered a revolutionary imaging technique called volumetric DNA microscopy. It builds [...]

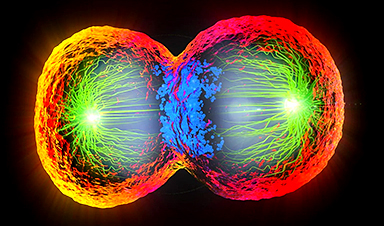

Scientists Just Captured the Stunning Process That Shapes Chromosomes

Scientists at EMBL have captured how human chromosomes fold into their signature rod shape during cell division, using a groundbreaking method called LoopTrace. By observing overlapping DNA loops forming in high resolution, they revealed that large [...]