In 2023, AI policy and regulation went from a niche, nerdy topic to front-page news. This is partly thanks to OpenAI’s ChatGPT, which helped AI go mainstream, but which also exposed people to how AI systems work—and don’t work. It has been a monumental year for policy: we saw the first sweeping AI law agreed upon in the European Union, Senate hearings and executive orders in the US, and specific rules in China for things like recommender algorithms.

If 2023 was the year lawmakers agreed on a vision, 2024 will be the year policies start to morph into concrete action. Here’s what to expect.

The United States

AI really entered the political conversation in the US in 2023. But it wasn’t just debate. There was also action, culminating in President Biden’s executive order on AI at the end of October—a sprawling directive calling for more transparency and new standards.

Through this activity, a US flavor of AI policy began to emerge: one that’s friendly to the AI industry, with an emphasis on best practices, a reliance on different agencies to craft their own rules, and a nuanced approach of regulating each sector of the economy differently.

Next year will build on the momentum of 2023, and many items detailed in Biden’s executive order will be enacted. We’ll also be hearing a lot about the new US AI Safety Institute, which will be responsible for executing most of the policies called for in the order.

From a congressional standpoint, it’s not clear what exactly will happen. Senate Majority Leader Chuck Schumer recently signaled that new laws may be coming in addition to the executive order. There are already several legislative proposals in play that touch various aspects of AI, such as transparency, deepfakes, and platform accountability. But it’s not clear which, if any, of these already proposed bills will gain traction next year.

What we can expect, though, is an approach that grades types and uses of AI by how much risk they pose—a framework similar to the EU’s AI Act. The National Institute of Standards and Technology has already proposed such a framework that each sector and agency will now have to put into practice, says Chris Meserole, executive director of the Frontier Model Forum, an industry lobbying body.

Another thing is clear: the US presidential election in 2024 will color much of the discussion on AI regulation. As we see in generative AI’s impact on social media platforms and misinformation, we can expect the debate around how we prevent harms from this technology to be shaped by what happens during election season.

Europe

The European Union has just agreed on the AI Act, the world’s first sweeping AI law.

After intense technical tinkering and official approval by European countries and the EU Parliament in the first half of 2024, the AI Act will kick in fairly quickly. In the most optimistic scenario, bans on certain AI uses could apply as soon as the end of the year.

This all means 2024 will be a busy year for the AI sector as it prepares to comply with the new rules. Although most AI applications will get a free pass from the AI Act, companies developing foundation models and applications that are considered to pose a “high risk” to fundamental rights, such as those meant to be used in sectors like education, health care, and policing, will have to meet new EU standards. In Europe, the police will not be allowed to use facial recognition technology in public places unless they first get court approval for specific purposes such as fighting terrorism, preventing human trafficking, or finding a missing person.

Other AI uses will be entirely banned in the EU, such as creating facial recognition databases like Clearview AI’s or using emotion recognition technology at work or in schools. The AI Act will require companies to be more transparent about how they develop their models, and it will make them, and organizations using high-risk AI systems, more accountable for any harms that result.

Companies developing foundation models (the models, such as GPT-4, upon which other AI products are based) will have to comply with the law within one year of its entry into force. Other tech companies have two years to implement the rules.

To meet the new requirements, AI companies will have to be more thoughtful about how they build their systems, and document their work more rigorously so it can be audited. The law will require companies to be more transparent about how their models have been trained and will ensure that AI systems deemed high-risk are trained and tested with sufficiently representative data sets in order to minimize biases, for example.

The EU believes that the most powerful AI models, such as OpenAI’s GPT-4 and Google’s Gemini, could pose a “systemic” risk to citizens and thus need additional work to meet EU standards. Companies must take steps to assess and mitigate risks and ensure that the systems are secure, and they will be required to report serious incidents and share details on their energy consumption. It will be up to companies to assess whether their models are powerful enough to fall into this category.

Open-source AI companies are exempted from most of the AI Act’s transparency requirements, unless they are developing models as computing-intensive as GPT-4. Not complying with rules could lead to steep fines or cause their products to be blocked from the EU.

The EU is also working on another bill, called the AI Liability Directive, which will ensure that people who have been harmed by the technology can get financial compensation. Negotiations for that are still ongoing and will likely pick up in 2024.

Some other countries are taking a more hands-off approach. For example, the UK, home of Google DeepMind, has said it does not intend to regulate AI in the short term. However, any company outside the EU, the world’s second-largest economy, will still have to comply with the AI Act if it wants to do business in the trading bloc.

Columbia University law professor Anu Bradford has called this the “Brussels effect”—by being the first to regulate, the EU is able to set the de facto global standard, shaping the way the world does business and develops technology. The EU successfully achieved this with its strict data protection regime, the GDPR, which has been copied everywhere from California to India. It hopes to repeat the trick when it comes to AI.

China

So far, AI regulation in China has been deeply fragmented and piecemeal. Rather than regulating AI as a whole, the country has released individual pieces of legislation whenever a new AI product becomes prominent. That’s why China has one set of rules for algorithmic recommendation services (TikTok-like apps and search engines), another for deepfakes, and yet another for generative AI.

The strength of this approach is that it allows Beijing to react quickly to risks emerging from advances in the technology, both for users and for the government. The problem is that it prevents a more long-term, panoramic perspective from developing.

That could change next year. In June 2023, China’s state council, the top governing body, announced that “an artificial intelligence law” is on its legislative agenda. This law would cover everything—like the AI Act for Europe. Because of its ambitious scope, it’s hard to say how long the legislative process will take. We might see a first draft in 2024, but it might take longer. In the interim, it won’t be surprising if Chinese internet regulators introduce new rules to deal with popular new AI tools or types of content that emerge next year.

So far, very little information about it has been released, but one document could help us predict the new law: scholars from the Chinese Academy of Social Sciences, a state-owned research institute, released an “expert suggestion” version of the Chinese AI law in August. This document proposes a “national AI office” to oversee the development of AI in China, demands a yearly independent “social responsibility report” on foundation models, and sets up a “negative list” of AI areas with higher risks, which companies can’t even research without government approval.

Chinese AI companies are already subject to plenty of regulations. In fact, any foundation model needs to be registered with the government before it can be released to the Chinese public (as of the end of 2023, 22 companies had registered their AI models).

This means that AI in China is no longer a Wild West environment. But exactly how these regulations will be enforced remains uncertain. In the coming year, generative-AI companies will have to try to figure out the compliance reality, especially around safety reviews and IP infringement.

At the same time, since foreign AI companies haven’t received any approval to release their products in China (and likely won’t in the future), the resulting domestic commercial environment protects Chinese companies. It may help them gain an edge against Western AI companies, but it may also stifle competition and reinforce China’s control of online speech.

The rest of the world

We’re likely to see more AI regulations introduced in other parts of the world throughout the next year. One region to watch will be Africa. The African Union is likely to release an AI strategy for the continent early in 2024, meant to establish policies that individual countries can replicate to compete in AI and protect African consumers from Western tech companies, says Melody Musoni, a policy officer at the European Centre for Development Policy Management.

Some countries, like Rwanda, Nigeria, and South Africa, have already drafted national AI strategies and are working to develop education programs, computing power, and industry-friendly policies to support AI companies. Global bodies like the UN, OECD, G20, and regional alliances have started to create working groups, advisory boards, principles, standards, and statements about AI. Groups like the OECD may prove useful in creating regulatory consistency across different regions, which could ease the burden of compliance for AI companies.

Geopolitically, we’re likely to see growing differences between how democratic and authoritarian countries foster—and weaponize—their AI industries. It will be interesting to see to what extent AI companies prioritize global expansion or domestic specialization in 2024. They might have to make some tough decisions.

News

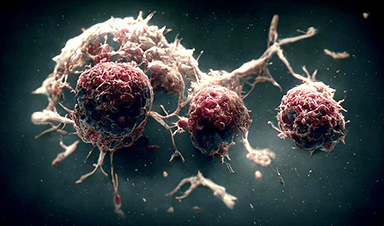

Scientists Melt Cancer’s Hidden “Power Hubs” and Stop Tumor Growth

Researchers discovered that in a rare kidney cancer, RNA builds droplet-like hubs that act as growth control centers inside tumor cells. By engineering a molecular switch to dissolve these hubs, they were able to halt cancer [...]

Platelet-inspired nanoparticles could improve treatment of inflammatory diseases

Scientists have developed platelet-inspired nanoparticles that deliver anti-inflammatory drugs directly to brain-computer interface implants, doubling their effectiveness. Scientists have found a way to improve the performance of brain-computer interface (BCI) electrodes by delivering anti-inflammatory drugs directly [...]

After 150 years, a new chapter in cancer therapy is finally beginning

For decades, researchers have been looking for ways to destroy cancer cells in a targeted manner without further weakening the body. But for many patients whose immune system is severely impaired by chemotherapy or radiation, [...]

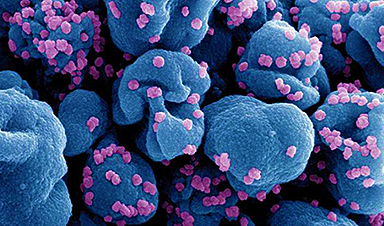

Older chemical libraries show promise for fighting resistant strains of COVID-19 virus

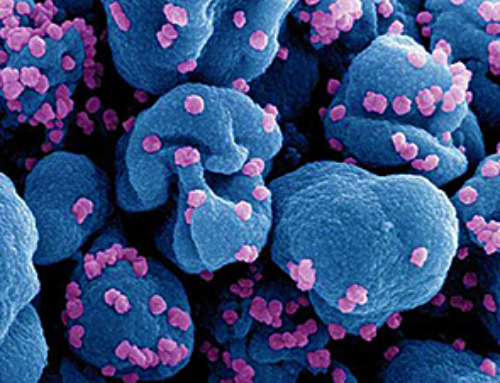

SARS‑CoV‑2, the virus that causes COVID-19, continues to mutate, with some newer strains becoming less responsive to current antiviral treatments like Paxlovid. Now, University of California San Diego scientists and an international team of [...]

Lower doses of immunotherapy for skin cancer give better results, study suggests

According to a new study, lower doses of approved immunotherapy for malignant melanoma can give better results against tumors, while reducing side effects. This is reported by researchers at Karolinska Institutet in the Journal of the National [...]

Researchers highlight five pathways through which microplastics can harm the brain

Microplastics could be fueling neurodegenerative diseases like Alzheimer's and Parkinson's, with a new study highlighting five ways microplastics can trigger inflammation and damage in the brain. More than 57 million people live with dementia, [...]

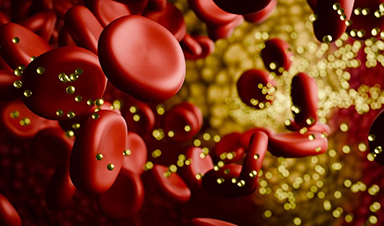

Tiny Metal Nanodots Obliterate Cancer Cells While Largely Sparing Healthy Tissue

Scientists have developed tiny metal-oxide particles that push cancer cells past their stress limits while sparing healthy tissue. An international team led by RMIT University has developed tiny particles called nanodots, crafted from a metallic compound, [...]

Gold Nanoclusters Could Supercharge Quantum Computers

Researchers found that gold “super atoms” can behave like the atoms in top-tier quantum systems—only far easier to scale. These tiny clusters can be customized at the molecular level, offering a powerful, tunable foundation [...]

A single shot of HPV vaccine may be enough to fight cervical cancer, study finds

WASHINGTON -- A single HPV vaccination appears just as effective as two doses at preventing the viral infection that causes cervical cancer, researchers reported Wednesday. HPV, or human papillomavirus, is very common and spread [...]

New technique overcomes technological barrier in 3D brain imaging

Scientists at the Swiss Light Source SLS have succeeded in mapping a piece of brain tissue in 3D at unprecedented resolution using X-rays, non-destructively. The breakthrough overcomes a long-standing technological barrier that had limited [...]

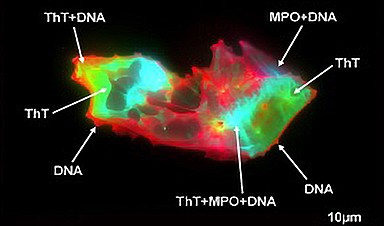

Scientists Uncover Hidden Blood Pattern in Long COVID

Researchers found persistent microclot and NET structures in Long COVID blood that may explain long-lasting symptoms. Researchers examining Long COVID have identified a structural connection between circulating microclots and neutrophil extracellular traps (NETs). The [...]

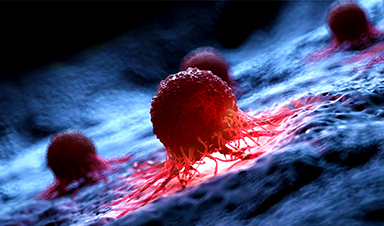

This Cellular Trick Helps Cancer Spread, but Could Also Stop It

Groups of normal cbiells can sense far into their surroundings, helping explain cancer cell migration. Understanding this ability could lead to new ways to limit tumor spread. The tale of the princess and the [...]

New mRNA therapy targets drug-resistant pneumonia

Bacteria that multiply on surfaces are a major headache in health care when they gain a foothold on, for example, implants or in catheters. Researchers at Chalmers University of Technology in Sweden have found [...]

Current Heart Health Guidelines Are Failing To Catch a Deadly Genetic Killer

New research reveals that standard screening misses most people with a common inherited cholesterol disorder. A Mayo Clinic study reports that current genetic screening guidelines overlook most people who have familial hypercholesterolemia, an inherited disorder that [...]

Scientists Identify the Evolutionary “Purpose” of Consciousness

Summary: Researchers at Ruhr University Bochum explore why consciousness evolved and why different species developed it in distinct ways. By comparing humans with birds, they show that complex awareness may arise through different neural architectures yet [...]

Novel mRNA therapy curbs antibiotic-resistant infections in preclinical lung models

Researchers at the Icahn School of Medicine at Mount Sinai and collaborators have reported early success with a novel mRNA-based therapy designed to combat antibiotic-resistant bacteria. The findings, published in Nature Biotechnology, show that in [...]